
min(DALL·E)

This is a minimal implementation of DALL·E Mini. It has been stripped down to the bare essentials needed for inference and converted to PyTorch. The only third-party dependencies are numpy, torch, and flax. PyTorch inference with DALL·E Mega takes about 10 seconds in Colab.
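Before running anything, you may want to confirm that the three dependencies named above are importable. The snippet below is a minimal sketch that uses only the standard library. `missing_dependencies` is a hypothetical helper and is not part of this repository.

```python
import importlib.util

# The three third-party packages named in this README.
REQUIRED = ("numpy", "torch", "flax")


def missing_dependencies(names=REQUIRED):
    """Return the names of packages that cannot be found on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_dependencies()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All dependencies found.")
```

`find_spec` only locates each package and does not import it, so the check stays fast even for large packages like torch.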

Setup

Run `sh setup.sh` to install dependencies and download the pretrained models. The setup script installs the wandb Python package, which it uses to download DALL·E Mini and DALL·E Mega. Alternatively, the models can be downloaded manually here:

- VQGan
- DALL·E Mini
- DALL·E Mega

Usage

Use the Python script `image_from_text.py` to generate images from the command line. Here are some example runs:

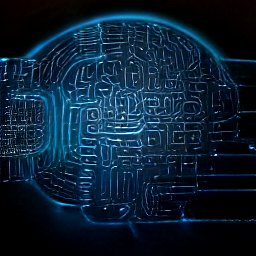

```
python3 image_from_text.py --text='artificial intelligence' --torch
```

```
python image_from_text.py --text='a comfy chair that looks like an avocado' --torch --mega --seed=10
```

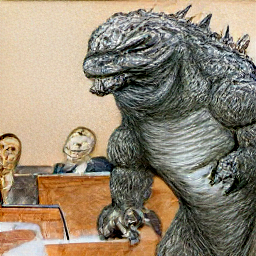

```
python image_from_text.py --text='court sketch of godzilla on trial' --mega --seed=100
```
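To script several runs, you can assemble the flags used in these examples (`--text`, `--torch`, `--mega`, `--seed`) into an argument list and hand it to `subprocess`. The sketch below is a hypothetical wrapper, not part of the repository. It assumes `image_from_text.py` is in the current directory. The flag meanings in the comments are inferred from the examples above rather than taken from the script's own help text.

```python
import subprocess
import sys


def build_command(text, torch=False, mega=False, seed=None):
    """Build an argument list for image_from_text.py from the flags shown above."""
    cmd = [sys.executable, "image_from_text.py", f"--text={text}"]
    if torch:
        cmd.append("--torch")  # presumably selects PyTorch inference
    if mega:
        cmd.append("--mega")  # presumably selects DALL·E Mega instead of Mini
    if seed is not None:
        cmd.append(f"--seed={seed}")
    return cmd


def run(text, **kwargs):
    """Run the script once; raises CalledProcessError on a non-zero exit."""
    subprocess.run(build_command(text, **kwargs), check=True)
```

For example, `run("a comfy chair that looks like an avocado", torch=True, mega=True, seed=10)` mirrors the second command above. Because the arguments are passed as a list, no shell quoting is needed, and the whole prompt reaches the script as a single argument. Each call still starts a new process, so the models are reloaded every time.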

Note: the command-line script reloads the models and parameters on every run. The Colab notebook demonstrates how to load the models once and then generate images multiple times.