min(DALL·E)

This is a minimal implementation of Boris Dayma's DALL·E Mini in PyTorch. It has been stripped to the bare essentials needed for inference. The only third-party dependencies are numpy and torch.

It currently takes 7.4 seconds to generate an image with DALL·E Mega on a standard GPU runtime in Colab.

The flax model and code for converting it to torch can be found here.

Install

$ pip install min-dalle

Usage

Python

To load a model once and generate multiple times, first initialize MinDalleTorch.

from min_dalle import MinDalleTorch

model = MinDalleTorch(
    is_mega=True,
    is_reusable=True,
    models_root='./pretrained'
)

The required models will be downloaded to models_root if they are not already there. After the model has loaded, call generate_image with some text and, optionally, a seed. You can call it as many times as you want without reloading the model.

text = "a comfy chair that looks like an avocado"
image = model.generate_image(text)
display(image)

text = "trail cam footage of gollum eating watermelon"
image = model.generate_image(text, seed=1)
display(image)

Command Line

Use image_from_text.py to generate images from the command line.

$ python image_from_text.py --text='artificial intelligence' --seed=7

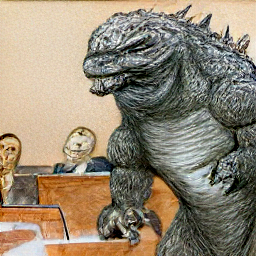

$ python image_from_text.py --text='court sketch of godzilla on trial' --mega
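
To run several prompts from a script, the command line can be assembled as an argument list and passed to `subprocess.run`. This is a sketch under assumptions: `build_command` is a hypothetical helper, it uses only the `--text`, `--seed`, and `--mega` flags shown in the commands above, and it assumes `image_from_text.py` is in the current working directory.

```python
import sys
from typing import List, Optional


def build_command(text: str, seed: Optional[int] = None, mega: bool = False) -> List[str]:
    """Build an argument list for image_from_text.py (hypothetical helper)."""
    # sys.executable runs the script with the same interpreter as this process.
    cmd = [sys.executable, "image_from_text.py", f"--text={text}"]
    if seed is not None:
        cmd.append(f"--seed={seed}")
    if mega:
        cmd.append("--mega")
    return cmd


if __name__ == "__main__":
    # Printed rather than executed, so the sketch runs without the script present.
    print(build_command("artificial intelligence", seed=7))
    print(build_command("court sketch of godzilla on trial", mega=True))
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting problems with prompts that contain spaces or quotes.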